The Mozilla Foundation has released a report on how YouTube’s video recommendation algorithm affects its users. Mozilla started collecting stories in 2019, and the final results can be found here [PDF]. In total, 37,380 YouTube users contributed data to the report by way of Mozilla’s RegretsReporter browser extension.

Highlights

- YouTube Regrets are disparate and disturbing. Volunteers reported everything from Covid fear-mongering to political misinformation to wildly inappropriate “children’s” cartoons. The most frequent Regret categories are misinformation, violent or graphic content, hate speech, and spam/scams.

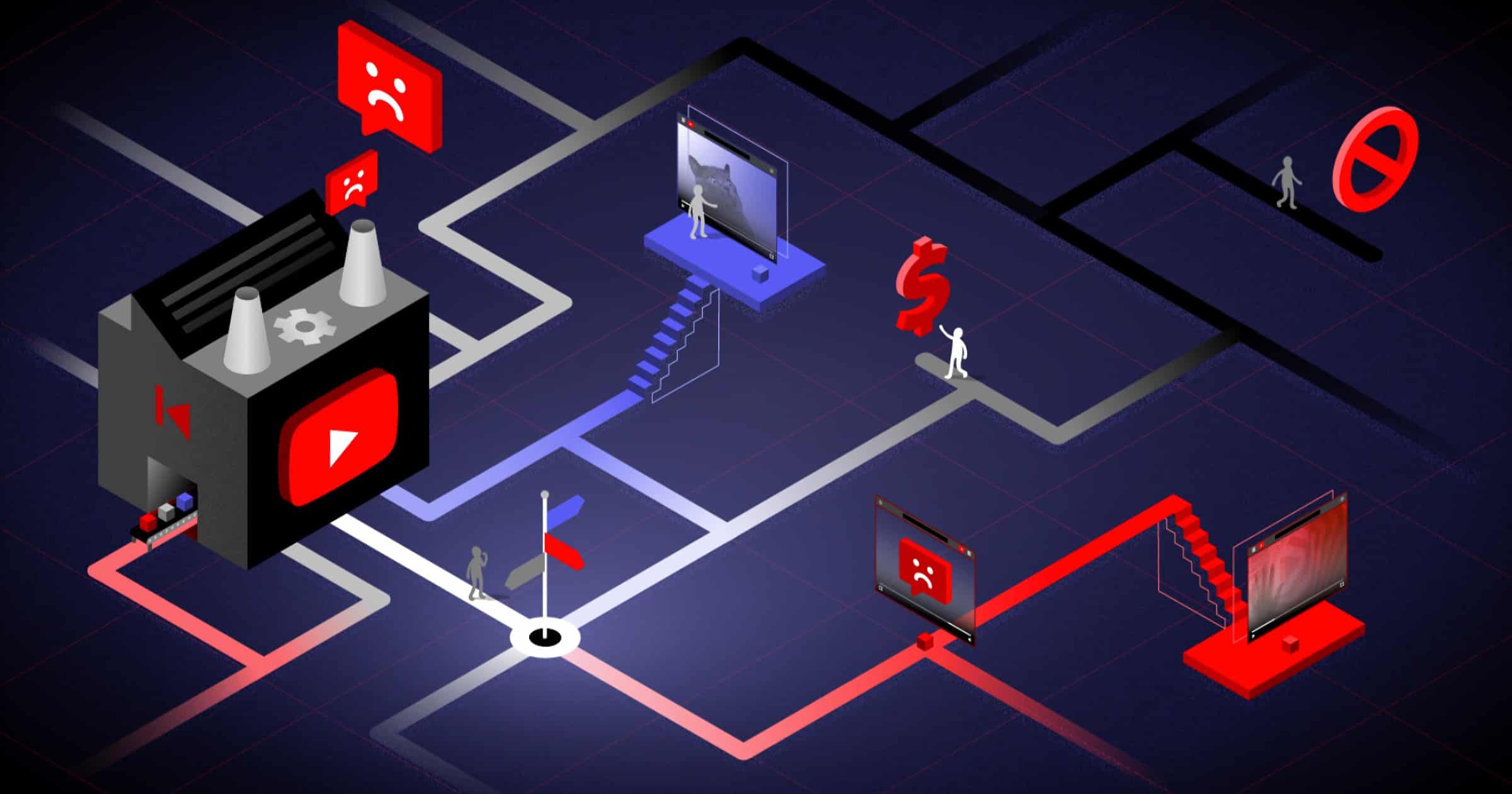

- The algorithm is the problem. 71% of all Regret reports came from videos recommended to the volunteers by YouTube’s automatic recommendation system. Further, recommended videos were 40% more likely to be reported by our volunteers than videos that they searched for. And in several cases, YouTube recommended videos that actually violate their own Community Guidelines and/or were unrelated to previous videos watched.

- Non-English speakers are hit the hardest. The rate of YouTube Regrets is 60% higher in countries that do not have English as a primary language (with Brazil, Germany and France being particularly high), and pandemic-related Regrets were especially prevalent in non-English languages.

Also, a notable quote: “We gave no specific guidance on what these stories should be about; submissions were self-identified as regrettable. Since then, Mozilla has intentionally steered away from strictly defining what ‘counts’ as a YouTube Regret, in order to allow people to define the full spectrum of bad experiences that they have on YouTube.”