During its Unleashed event on October 18, 2021, Apple announced new M1 chipsets with increased Unified Memory. The first M1-based Macs could handle up to 16GB of memory. The new M1 Pro can handle up to 32GB of Unified Memory, while the M1 Max doubles that to 64GB. On the surface, that seems like a very modest amount of memory. When you look at how Apple’s Unified Memory Architecture really works, though, it is actually fairly impressive.

CPU vs Graphics Memory

In most PCs, the CPU’s memory and the graphics memory live on separate chips. Other platforms have their own concepts of unified memory, but you’ll usually still see the graphics memory and system memory as discrete chips.

The CPU memory, or system RAM, usually comes as “sticks” that plug into your computer’s motherboard. Alternatively, the RAM could be soldered in place on the motherboard. Either way, system memory is physically separate from the processor, which slows access: your data has to travel along the circuitry of the motherboard before it can get to the processor.

Graphics memory is somewhat different. With a discrete graphics chipset, the memory is soldered onto the graphics card’s printed circuit board (PCB). It’s still separated from the GPU, however, and data has to travel along the circuitry of the PCB, which takes time.

How Does Apple’s Unified Memory Architecture Change That?

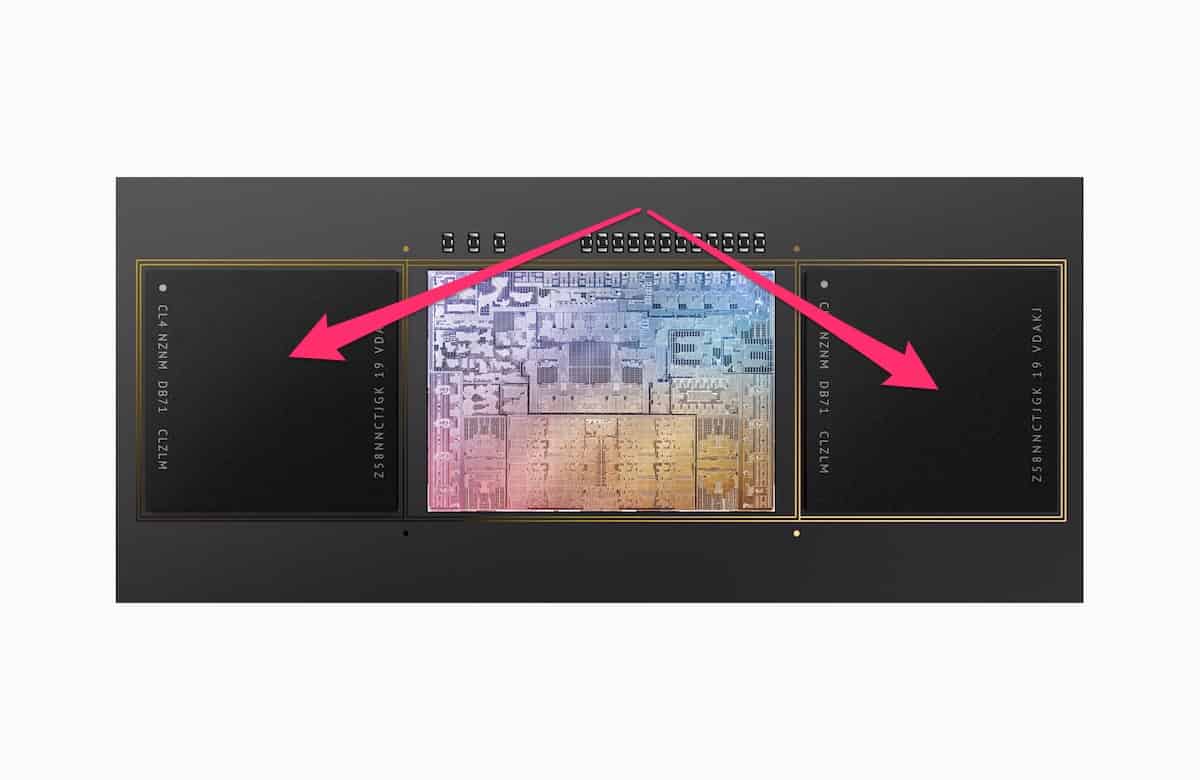

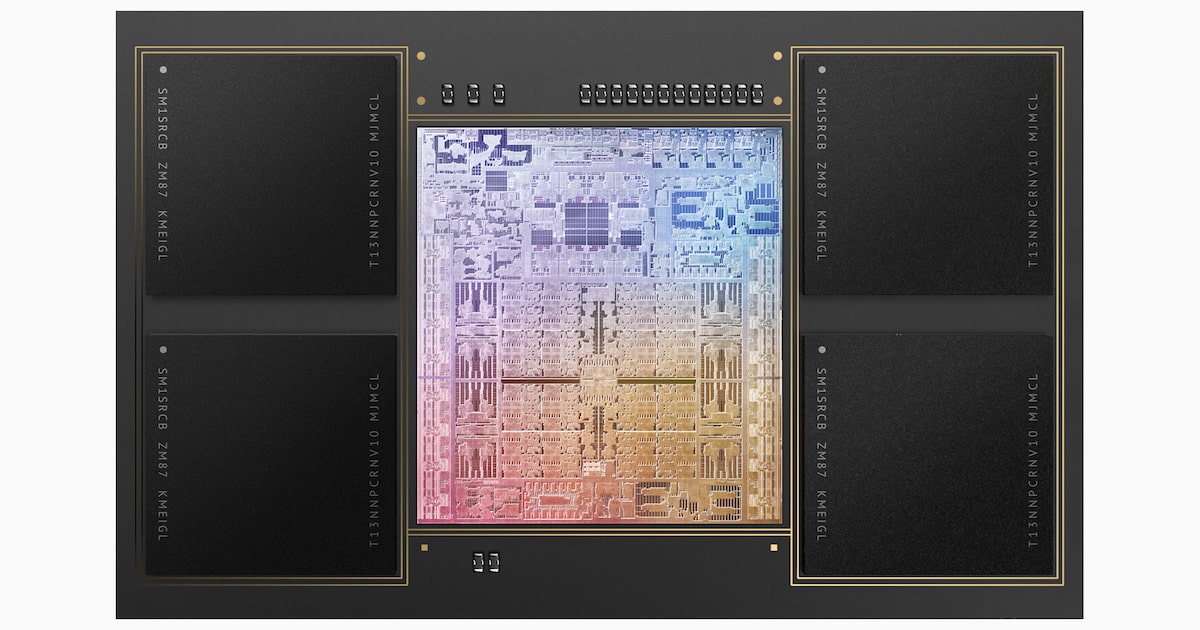

In Apple’s implementation of unified memory, the available RAM is built into the M1 system-on-a-chip (SoC) package. While it’s not located within the processor die itself, it’s mounted on the same package, right beside the other fundamental components.

What this means is that the data has a shorter distance to travel to get to either the CPU or the GPU. This results in higher memory bandwidth for both the CPU and the GPU. Here’s why.

When you are processing a video in Final Cut Pro on a conventional system, for example, the CPU gets all the instructions for your import or export first. It then copies the data the GPU needs into the GPU’s own dedicated memory. Once the GPU is finished, it has to transmit the results back to the CPU.

With Apple’s UMA, your CPU and graphics processor share a common pool of memory. Since the GPU and CPU are using that common pool, the CPU doesn’t have to send data anywhere. It just tells the GPU, “Grab the stuff from this memory address and do your thing.”

Once the GPU is done, all it has to do is let the CPU know. There’s no need to transfer the processed video from graphics memory to system memory or anywhere else. It just tells the CPU, “The stuff at memory address 0x00000000 is ready for you.”
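The difference between the two flows can be sketched with a toy model. The Python below is an illustration only, not Apple’s implementation or any real graphics API: plain `bytearray` objects stand in for memory pools, and the function names (`process_on_gpu`, `discrete_round_trip`, `unified_round_trip`) are hypothetical. All it does is count how many bytes get copied in each flow.

```python
# Toy model: bytearrays stand in for memory pools. Nothing here touches
# real GPU hardware; the names are made up for illustration.

def process_on_gpu(buf):
    """Stand-in for GPU work: invert every byte of the buffer in place."""
    for i, value in enumerate(buf):
        buf[i] = 255 - value


def discrete_round_trip(system_ram):
    """Separate pools: copy to graphics memory, process, copy back."""
    copied = 0
    graphics_memory = bytearray(system_ram)   # copy: system RAM -> graphics memory
    copied += len(graphics_memory)
    process_on_gpu(graphics_memory)
    result = bytearray(graphics_memory)       # copy: graphics memory -> system RAM
    copied += len(result)
    return result, copied


def unified_round_trip(shared_pool):
    """One shared pool: the GPU works on the buffer the CPU already has."""
    process_on_gpu(shared_pool)               # no copy, only a reference is passed
    return shared_pool, 0


frame = bytearray(range(256)) * 4             # a 1,024-byte stand-in for video data

discrete_result, discrete_copied = discrete_round_trip(bytearray(frame))
shared = bytearray(frame)
unified_result, unified_copied = unified_round_trip(shared)

print("discrete: bytes copied =", discrete_copied)
print("unified:  bytes copied =", unified_copied)
print("same result:", discrete_result == unified_result)
```

In this sketch the discrete flow copies the 1,024-byte frame twice (2,048 bytes in total), while the unified flow copies nothing and hands back the very same buffer it was given. Real systems add drivers, caches, and synchronization that the model ignores.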

More Memory and Faster Performance

The new M1 Pro MacBook Pros are available with up to 32GB of unified memory. The memory bandwidth blazes at up to 200GB/s, “enabling creatives like 3D artists and game developers to do more on the go than ever before”.

On the M1 Max, all of that is doubled. You can configure your MacBook Pro with up to 64GB of unified memory, and its memory bandwidth is 400GB/s.

Compare that with Intel’s theoretical maximum memory bandwidth for its Core X-Series processors: 94GB/s. Apple’s Unified Memory Architecture is a clear winner here, providing at least twice the memory bandwidth that Intel currently supports.

The moment you realize that the M1 Max chip’s 400GBs of memory bandwidth is almost as fast as the PlayStation 5’s 448GBs. So we’re basically getting a PS5 in a laptop. pic.twitter.com/2DxtIN0nwo

— Vadim Yuryev (@VadimYuryev) October 18, 2021

In another comparison, Twitter user @VadimYuryev pointed out the PlayStation 5’s memory bandwidth is 448GB/s. You’re getting next-gen video game console memory bandwidth in a laptop, basically.
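To put those figures side by side, here is a small back-of-the-envelope calculation in Python. It uses only the peak bandwidth numbers quoted above; the helper names (`ratio`, `seconds_to_stream`) are made up for this sketch, and peak figures are theoretical maximums, so real-world throughput will be lower.

```python
# Peak memory bandwidth figures quoted in the article, in GB/s.
BANDWIDTH_GBPS = {
    "Intel Core X-Series": 94,
    "M1 Pro": 200,
    "M1 Max": 400,
    "PlayStation 5": 448,
}


def ratio(a, b):
    """How many times more peak bandwidth device a has than device b."""
    return BANDWIDTH_GBPS[a] / BANDWIDTH_GBPS[b]


def seconds_to_stream(gigabytes, device):
    """Ideal time to move `gigabytes` of data at the device's peak bandwidth."""
    return gigabytes / BANDWIDTH_GBPS[device]


for name, gbps in BANDWIDTH_GBPS.items():
    ms_per_gb = seconds_to_stream(1, name) * 1000
    print(f"{name:<20} {gbps:>4} GB/s  {ms_per_gb:6.2f} ms per GB")

print(f"M1 Pro vs Intel: {ratio('M1 Pro', 'Intel Core X-Series'):.2f}x")
print(f"M1 Max vs Intel: {ratio('M1 Max', 'Intel Core X-Series'):.2f}x")
print(f"M1 Max vs PS5:   {ratio('M1 Max', 'PlayStation 5'):.2f}x")
```

By these numbers, the M1 Pro offers roughly 2.1 times the quoted Intel figure, the M1 Max roughly 4.3 times, and the M1 Max reaches about 89 percent of the PlayStation 5’s bandwidth.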

Tip of the hat to How-To Geek’s Ian Paul for his excellent primer on Apple’s Unified Memory Architecture.

Jeff:

A lucid and tidy summary.

And all this at a fraction of the energy consumption, providing longer battery life for laptops.

For many tech observers, both professional and lay, it was obvious that Apple’s bet on SoC was going to be a ‘game-changer’, to use that hackneyed but apposite phrase. Having seen its test drive in the 2018 iPad Pro lineup (the M1 is essentially a spec-bumped version of the same chipset), and its successful rollout in the initial Mac lineup, it was obvious that the industry would follow, and it is.

I still think that other under-appreciated death knells for the x86 chipset include the superior capability and performance of machine learning on the SoC, as well as novel security protocols, which Apple were able to field-test over several years on the iPad and iPhone systems prior to rollout on the Mac.

I also think that, in retrospect, it is easier to appreciate Apple’s roadmap for getting their consumer base to accept pre-configured devices. These have been the subject of user complaints, particularly on ‘pro’ devices, but configuring a machine after purchase will no longer be an option with SoC-powered devices. Pre-configured devices are the new standard.

Thanks for the summary.